The challenge

At Apex Lab, performance is a priority in our serverless work. AWS Lambda is an essential part of our tech stack, offering excellent scalability and cost efficiency. However, we frequently run into a well-known problem: cold start duration.

A cold start happens when Lambda has to create a new execution environment for a function: it downloads the code, starts the runtime, and runs the function's initialization code before handling the request. This adds latency and degrades the user experience, especially under high load, when Lambda scales out and creates many new execution environments.
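In a Node.js function, the function's own initialization code is everything at module scope, including every `require()`; warm invocations reuse the environment and run only the handler. A minimal sketch of a handler that reports whether its invocation was a cold start (the `coldStart` and `invocationCount` fields are illustrative, not part of any AWS API):

```javascript
// Module scope: evaluated once per execution environment, i.e. during a cold start.
// Every require() placed here (and everything it loads) adds to cold start duration.
let invocationCount = 0;

// Handler: runs on every invocation; warm invocations reuse the state above.
async function handler(event) {
  invocationCount += 1;
  return {
    coldStart: invocationCount === 1, // true only for the first invocation in this environment
    invocationCount,
  };
}

module.exports = { handler };
```

Logging a flag like this is a cheap way to see how often your functions actually hit cold starts.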

Why does Lambda size matter?

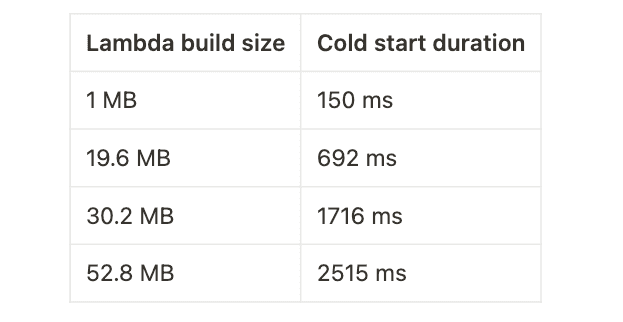

Our test results show a direct correlation between Lambda build size and cold start duration: the larger the build, the longer the cold start. Reducing build size is therefore one of the most direct ways to cut cold start latency and improve overall performance.

Our mission is to minimize cold start duration and improve user experience, and optimizing your Lambda build size is one of the most effective practices we recommend.
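Before optimizing, it helps to know where each function stands. A minimal sketch, assuming your build step emits one bundled file per function (`.js`, `.mjs` or `.cjs`) into a single directory; `measureBuildSizes` is a hypothetical helper that lists each file's size, largest first:

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical helper: size of each built Lambda file in a build directory.
// Assumes one bundled file per function; subdirectories and other files are ignored.
function measureBuildSizes(buildDir) {
  return fs
    .readdirSync(buildDir, { withFileTypes: true })
    .filter((entry) => entry.isFile() && /\.(m|c)?js$/.test(entry.name))
    .map((entry) => ({
      name: entry.name,
      sizeInBytes: fs.statSync(path.join(buildDir, entry.name)).size,
    }))
    .sort((a, b) => b.sizeInBytes - a.sizeInBytes); // largest first
}
```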

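Here are some examples of how to optimize your Lambda imports (using the AWS SDK for JavaScript v2), so that each function loads only the parts of a package it actually uses: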

```javascript
// Instead of const AWS = require('aws-sdk'), import only the client you need:
const DynamoDB = require('aws-sdk/clients/dynamodb')

// Instead of const AWSXRay = require('aws-xray-sdk'), use the core package:
const AWSXRay = require('aws-xray-sdk-core')

// Instead of const AWS = AWSXRay.captureAWS(require('aws-sdk')), instrument only the client you use:
const dynamodb = new DynamoDB.DocumentClient()
AWSXRay.captureAWSClient(dynamodb.service)
```

Our solution

To address this, we ran extensive tests, collected data, and built Serverless Icebreaker, a Node.js module that helps us optimize our serverless applications and the user experience they deliver.

Serverless Icebreaker is built specifically to reduce AWS Lambda cold start duration. After you build your Lambda functions, it analyzes the size of each build, identifies unoptimized imports, and reports errors and warnings for functions that exceed its size thresholds, along with suggestions for reducing cold start times.
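To illustrate the idea of an "unoptimized import", here is a rough, source-level sketch based on the import examples earlier in this post: it flags `require`/`import` statements that pull in the whole `aws-sdk` or `aws-xray-sdk` package. The rule table and `findUnoptimizedImports` are hypothetical; this is an illustration, not Serverless Icebreaker's rule set or code.

```javascript
// Hypothetical rule table: whole-package imports and a suggested lighter alternative.
const UNOPTIMIZED_IMPORTS = new Map([
  ["aws-sdk", "import a single client, e.g. require('aws-sdk/clients/dynamodb')"],
  ["aws-xray-sdk", "use require('aws-xray-sdk-core') instead"],
]);

// Matches require('pkg') / require("pkg") and ESM `... from 'pkg'`, capturing the specifier.
const IMPORT_PATTERN = /(?:require\(\s*|from\s+)(['"])([^'"]+)\1/g;

function findUnoptimizedImports(source) {
  const findings = [];
  for (const match of source.matchAll(IMPORT_PATTERN)) {
    const specifier = match[2];
    // Exact matches only: deep imports such as 'aws-sdk/clients/dynamodb' are fine.
    const hint = UNOPTIMIZED_IMPORTS.get(specifier);
    if (hint !== undefined) findings.push({ specifier, hint });
  }
  return findings;
}
```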

```text
➜ serverless-icebreaker: npx sib
✅ simple-lambda.mjs
✅ optimized.mjs
🚧 unoptimized.mjs
  Lambda size: 18.72 MB
  Imported modules: 5
  Possible Cold Start Duration: ~661 ms
  Most frequent modules: {"aws-sdk":"96.87%","xmlbuilder":"2.52%","xml2js":"0.46%"}
❌ get-long-cold-start.mjs
  Lambda size: 50.34 MB
  Imported modules: 18
  Possible Cold Start Duration: ~2398 ms
  Most frequent modules: {"aws-cdk-lib":"57.54%","aws-sdk":"37.87%","aws-xray-sdk-core":"2.60%"}
📊 Metrics:
  Number of lambdas: 4
  Errors and Warnings: 2
  Error threshold: 20 MB
  Warning threshold: 18 MB
  Average lambda size: 25.17 MB
  Largest lambda size: 50.34 MB
  Smallest lambda size: 816 byte
✨ Done in 1.69s.
```

Seamless integration into the development pipeline was a key design goal. You can run the tool in a pre-commit hook or as a step in your GitHub CI/CD workflow. It reports errors and warnings and estimates each function's cold start duration, so you can address problems proactively, before deployment.
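The error and warning thresholds in the output above (20 MB and 18 MB) lend themselves to a simple gate. A minimal sketch, assuming you already have each function's build size in bytes and that 1 MB = 1024 × 1024 bytes: it classifies every Lambda against those thresholds and computes a non-zero exit code when any error is found, which is how a pre-commit hook or CI step usually fails. `classify`, `runGate`, and the choice that warnings do not fail the build are hypothetical, not Serverless Icebreaker's behavior.

```javascript
// Thresholds taken from the sample output; 1 MB = 1024 * 1024 bytes is an assumption.
const MB = 1024 * 1024;
const ERROR_THRESHOLD_BYTES = 20 * MB;
const WARNING_THRESHOLD_BYTES = 18 * MB;

// Classify one build: above the error threshold -> "error", above the warning threshold -> "warning".
function classify(sizeInBytes) {
  if (sizeInBytes > ERROR_THRESHOLD_BYTES) return "error";
  if (sizeInBytes > WARNING_THRESHOLD_BYTES) return "warning";
  return "ok";
}

// Group lambdas by severity and decide whether the pipeline should fail.
function runGate(lambdas) {
  const result = { errors: [], warnings: [], ok: [] };
  for (const { name, sizeInBytes } of lambdas) {
    const level = classify(sizeInBytes);
    if (level === "error") result.errors.push(name);
    else if (level === "warning") result.warnings.push(name);
    else result.ok.push(name);
  }
  // Only errors fail the build in this sketch; warnings are reported but do not block it.
  result.exitCode = result.errors.length > 0 ? 1 : 0;
  return result;
}
```

In a CI script you would then assign `process.exitCode = runGate(lambdas).exitCode` so the job fails when a function is too large.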

Results

Adding Serverless Icebreaker to our development workflow has noticeably improved the efficiency of our serverless applications. We have observed a considerable reduction in the cold start times of our AWS Lambda functions, most visibly at peak load, when Lambda scales out and creates new execution environments.

Serverless Icebreaker has also made it easier for our engineers to find and fix performance issues. Its detailed reports point out unoptimized imports and other problems that could slow down our Lambdas, and its cold start predictions let us make improvements proactively, before deployment.
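The `metrics` section of a report like the one below is a straightforward aggregation over per-function build sizes. A minimal sketch, assuming sizes in bytes and 1 MB = 1024 × 1024 bytes; the field names mirror the sample report, while `buildMetrics` and `formatSize` are hypothetical helpers, not Serverless Icebreaker's code:

```javascript
const BYTES_PER_MB = 1024 * 1024;

// Sizes under 1 MB are shown in bytes, larger ones in MB with two decimals (an assumed convention).
function formatSize(bytes) {
  return bytes < BYTES_PER_MB
    ? `${Math.round(bytes)} byte`
    : `${(bytes / BYTES_PER_MB).toFixed(2)} MB`;
}

// Aggregate per-lambda sizes (in bytes) into the report's metrics block.
function buildMetrics(sizesInBytes) {
  if (sizesInBytes.length === 0) throw new Error("no lambdas to report on");
  const total = sizesInBytes.reduce((sum, size) => sum + size, 0);
  return {
    numberOfLambdas: sizesInBytes.length,
    averageLambdaSize: formatSize(total / sizesInBytes.length),
    largestLambdaSize: formatSize(Math.max(...sizesInBytes)),
    smallestLambdaSize: formatSize(Math.min(...sizesInBytes)),
  };
}
```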

```json
// Detailed report
{
  "timeStamp": "13.07.23. 17:20",
  "metrics": {
    "numberOfLambdas": 5,
    "averageLambdaSize": "25.17 MB",
    "largestLambdaSize": "50.34 MB",
    "smallestLambdaSize": "816 byte"
  },
  "lambdasWithErrors": [
    {
      "lambdaName": "get-long-cold-start.mjs",
      "possibleColdStartDuration": "~2398 ms",
      "lambdaSize": "50.34 MB",
      "importedModules": 18,
      "mostFrequentModules": {
        "aws-cdk-lib": "57.54%", "aws-sdk": "37.87%", "aws-xray-sdk-core": "2.60%"
      }
    }
  ],
  "lambdasWithWarnings": [
    {
      "lambdaName": "unoptimized.mjs",
      "possibleColdStartDuration": "~661 ms",
      "lambdaSize": "18.72 MB",
      "importedModules": 5,
      "mostFrequentModules": {
        "aws-sdk": "96.87%", "xmlbuilder": "2.52%", "xml2js": "0.46%"
      }
    }
  ],
  "acceptableLambdas": [
    { "..." },
    { "..." },
    { "..." }
  ]
}
```

```yaml
## Pipeline mode: use it in a GitHub Actions workflow
name: PR checks

on: pull_request

jobs:
  lint-and-test:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        node-version: [16.x]
    steps:
      - uses: actions/checkout@v2
      - name: Use Node.js ${{ matrix.node-version }}
        uses: actions/setup-node@v1
        with:
          node-version: ${{ matrix.node-version }}
      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1
        with:
          aws-access-key-id: ${{ secrets.FEATURE_AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.FEATURE_AWS_SECRET_ACCESS_KEY }}
          aws-region: eu-central-1
      - uses: c-hive/gha-yarn-cache@v2
      - name: build & lint & test
        run: |
          yarn install
          yarn build
          ## pipeline mode
          yarn sib --pipeline
          yarn lint
          yarn test
        env:
          CI: true
```

Behind the scenes

Our team of four (two engineers, a tech lead, and a PM) worked closely together on Serverless Icebreaker. The project pushed us out of our comfort zones and helped each of us grow professionally in unique ways. We even made it to 6th place on Product Hunt!

“Serverless Icebreaker has greatly simplified our workflow. We no longer have to guess our way through performance issues. Now, we have exact metrics and insights to guide our optimization efforts.”

Zoltán Full-stack Engineer

“I appreciate how Serverless Icebreaker has made our application more robust and efficient. But more than that, working on this tool has been an incredible learning experience.”

Attila Full-stack Engineer

“This project started with a single idea shared in a Slack message. Seeing it transform into a tool that benefits not only our team but the entire AWS Lambda community has been incredibly rewarding.”

Bence Tech Lead

“Balancing learning and delivery has been my biggest challenge as a project manager. But Serverless Icebreaker provided a unique opportunity for me to delve into the world of development tools, and I’ve learned a lot from this experience.”

Réka PM

Serverless Icebreaker is a testament to what a small, dedicated team can achieve. It’s not just a tool; it’s the result of hard work, learning, and a commitment to improving serverless computing.

To wrap it up

If you’re building serverless applications, start optimizing your AWS Lambda functions today with Serverless Icebreaker.

Your feedback and contributions are invaluable to us! For any questions, feel free to contact us on our Q&A platform. You can also report issues or request features on our GitHub page.

Happy lambda-catching! 👋